dotnet in memory database

disclaimer: this is more about experimenting and having fun rather than something can be used in production

Let's prettend we are building an service responsible for player entity.

As usual it will have some basic CRUD and probably few more dedicated endpoints.

in memory collection

Instead of choosing storage upfront we will store this collection in memory which will make it faster than anything else.

Something as simple as:

using System.Collections.Concurrent;

using System.Collections.Generic;

using System.ComponentModel.DataAnnotations;

using Microsoft.AspNetCore.Mvc;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

var app = builder.Build();

app.MapControllers();

app.Run();

public record Player(int Id, string Name, int Age);

[ApiController]

public class PlayersController : ControllerBase

{

private static readonly ConcurrentDictionary<int, Player> _players = new ConcurrentDictionary<int, Player>();

[HttpGet("/players/count")]

public ActionResult<long> Count() => _players.Count();

[HttpGet("/players")]

public ActionResult<IEnumerable<Player>> Read([FromQuery][Range(1, 100)] int take = 100, [FromQuery] int skip = 0)

{

return Ok(_players.Values.OrderBy(p => p.Id).Skip(skip).Take(take));

}

[HttpGet("/players/young")]

public ActionResult<IEnumerable<Player>> Young([FromQuery][Range(1, 100)] int take = 100, [FromQuery] int skip = 0)

{

return Ok(_players.Values.Where(p => p.Age <= 21).OrderBy(p => p.Id).Skip(skip).Take(take));

}

[HttpPost("/players")]

public ActionResult<Player> Save(Player player)

{

if (player.Id == 0)

{

player = player with { Id = 1 + _players.Keys.DefaultIfEmpty().Max() };

}

_players[player.Id] = player;

return Ok(player);

}

[HttpDelete("/players/{id}")]

public ActionResult Delete(int id)

{

var found = _players.Values.FirstOrDefault(p => p.Id == id);

if (found != null)

{

_players.Remove(found.Id, out var removed);

}

return NoContent();

}

}and give it a try:

curl -X POST http://localhost:5000/players -H 'Content-Type: application/json' -d '{"name": "Michael", "age": 8}'

# {"id":1,"name":"Michael","age":8}

curl -X POST http://localhost:5000/players -H 'Content-Type: application/json' -d '{"name": "Kira", "age": 5}'

# {"id":2,"name":"Kira","age":5}

curl http://localhost:5000/players/count

# 2

curl http://localhost:5000/players

# [{"id":1,"name":"Michael","age":8},{"id":2,"name":"Kira","age":5}]Yes ofcourse you may complain that if collection is too big it may not fit into memory but the same is true for any other database and then it does not really matter which server we will scale - one with database or one with this service.

persistency - snapshot

Ok, but how about persistency - we will store whole collection in new line delimited json (ndjson) snapshot file which will act as backup and will be readed on application start.

Each time we will create such snapshot it name will contain timestamp so it will act as a natural backup.

using System.Collections;

using System.Collections.Concurrent;

using System.Collections.Generic;

using System.ComponentModel.DataAnnotations;

using System.Text;

using System.Text.Json;

using Microsoft.AspNetCore.Mvc;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

builder.Services.AddSingleton<Players>();

var app = builder.Build();

app.MapControllers();

app.Run();

public record Player(int Id, string Name, int Age);

[ApiController]

public class PlayersController : ControllerBase

{

private readonly Players _players;

public PlayersController(Players players)

{

_players = players;

}

[HttpGet("/players/count")]

public ActionResult<long> Count() => _players.Count();

[HttpGet("/players")]

public ActionResult<IEnumerable<Player>> Read([FromQuery][Range(1, 100)] int take = 100, [FromQuery] int skip = 0)

{

return Ok(_players.OrderBy(p => p.Id).Skip(skip).Take(take));

}

[HttpGet("/players/young")]

public ActionResult<IEnumerable<Player>> Young([FromQuery][Range(1, 100)] int take = 100, [FromQuery] int skip = 0)

{

return Ok(_players.Where(p => p.Age <= 21).OrderBy(p => p.Id).Skip(skip).Take(take));

}

[HttpPost("/players")]

public ActionResult<Player> Save(Player player)

{

var saved = _players.Save(player);

return Ok(saved);

}

[HttpDelete("/players/{id}")]

public ActionResult Delete(int id)

{

var found = _players.FirstOrDefault(p => p.Id == id);

if (found != null)

{

_players.Remove(found);

}

return NoContent();

}

[HttpGet("/players/snapshot")]

public async Task<ActionResult<string>> Snapshot()

{

await _players.Snapshot();

return Ok("DONE, i'm for internal use and exposed just for demo");

}

}

public class Players: IEnumerable<Player>

{

private const string StoragePath = "/Users/mac/Desktop/Walley/";

private const string FileNamePrefix = "players";

private static readonly ConcurrentDictionary<int, Player> _players = new ConcurrentDictionary<int, Player>();

private readonly ILogger<Players> _logger;

public Players(ILogger<Players> logger)

{

_logger = logger;

var ts = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();

var lastSnapshot = Directory.EnumerateFiles(StoragePath, $"{FileNamePrefix}.*.snapshot").MaxBy(x => x);

if (lastSnapshot != null)

{

_logger.LogInformation("SNAPSHOT {LastSnapshot}", lastSnapshot);

foreach(var l in File.ReadLines(lastSnapshot))

{

if (string.IsNullOrEmpty(l))

{

continue;

}

try

{

var player = JsonSerializer.Deserialize<Player>(l);

if (player == null)

{

continue;

}

_players[player.Id] = player;

// _logger.LogInformation("<<<<<<<<< {player}", player);

}

catch (Exception ex)

{

_logger.LogWarning(ex, "unable to read '{L}'", l);

}

}

}

}

public IEnumerator<Player> GetEnumerator() => _players.Values.GetEnumerator();

IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();

public Player Save(Player player)

{

if (player.Id == 0)

{

player = player with { Id = 1 + _players.Keys.DefaultIfEmpty().Max() };

}

_players[player.Id] = player;

return player;

}

public bool Remove(Player player)

{

var result = _players.Remove(player.Id, out var removed);

return result;

}

public async Task Snapshot()

{

var ts = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();

var p = Path.Join(StoragePath, $"{FileNamePrefix}.{ts}.snapping");

var nl = new ReadOnlyMemory<byte>(Encoding.ASCII.GetBytes("\n"));

await using var fileStream = File.Create(p);

await using var buffered = new BufferedStream(fileStream);

foreach (var player in _players.Values)

{

await JsonSerializer.SerializeAsync(buffered, player);

await buffered.WriteAsync(nl);

}

await buffered.DisposeAsync();

await fileStream.DisposeAsync();

File.Move(p, p.Replace(".snapping", ".snapshot"));

}

}and give it a try:

curl -X POST http://localhost:5000/players -H 'Content-Type: application/json' -d '{"name": "Michael", "age": 8}'

# {"id":1,"name":"Michael","age":8}

curl -X POST http://localhost:5000/players -H 'Content-Type: application/json' -d '{"name": "Kira", "age": 5}'

# {"id":2,"name":"Kira","age":5}

curl http://localhost:5000/players/count

# 2

curl http://localhost:5000/players/snapshot

# DONE, i'm for internal use and exposed just for demothen try to restart app and call players count endpoint to see if data will be recovered

persistency - WAL

to make everything more reliable and safe we are going to introduce something similar to write ahead log

how it will work:

internaly, whenever we are modifying collection we will put an event to queue and there will be dedicated thread to flush this queue to disk

log on a file system will also be simple new line delimited json with log of operations, like:

{"op":"save","el":{"id":1,"name":"Michael","age":8},"ts":1667288155171}

{"op":"save","el":{"id":2,"name":"Kira","age":5},"ts":1667288155171}whenever app start it will not only read last snapshot but also all available logs that were made after

also while creating snapshot we will start new log, so even in case when something changed while we were writing to disk we wont lose this

the only decision here is it should by sync or async, in my case, it is a demo and not banking system, thats why everything is done in background

so here is intermediate result

using System.Collections;

using System.Collections.Concurrent;

using System.ComponentModel.DataAnnotations;

using System.Text;

using System.Text.Json;

using Microsoft.AspNetCore.Mvc;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

builder.Services.AddSingleton<Players>();

builder.Services.AddHostedService(p => p.GetRequiredService<Players>());

var app = builder.Build();

app.MapControllers();

app.Run();

public record Player(int Id, string Name, int Age);

[ApiController]

public class PlayersController : ControllerBase

{

private readonly Players _players;

public PlayersController(Players players)

{

_players = players;

}

[HttpGet("/players/count")]

public ActionResult<long> Count() => _players.Count();

[HttpGet("/players")]

public ActionResult<IEnumerable<Player>> Read([FromQuery][Range(1, 100)] int take = 100, [FromQuery] int skip = 0)

{

return Ok(_players.OrderBy(p => p.Id).Skip(skip).Take(take));

}

[HttpGet("/players/young")]

public ActionResult<IEnumerable<Player>> Young([FromQuery][Range(1, 100)] int take = 100, [FromQuery] int skip = 0)

{

return Ok(_players.Where(p => p.Age <= 21).OrderBy(p => p.Id).Skip(skip).Take(take));

}

[HttpPost("/players")]

public ActionResult<Player> Save(Player player)

{

var saved = _players.Save(player);

return Ok(saved);

}

[HttpDelete("/players/{id}")]

public ActionResult Delete(int id)

{

var found = _players.FirstOrDefault(p => p.Id == id);

if (found != null)

{

_players.Remove(found);

}

return NoContent();

}

[HttpGet("/players/snapshot")]

public async Task<ActionResult<string>> Snapshot()

{

await _players.Snapshot();

return Ok("DONE, i'm for internal use and exposed just for demo");

}

}

public class Players: BackgroundService, IEnumerable<Player>

{

private const string StoragePath = "/Users/mac/Desktop/Walley/";

private const string FileNamePrefix = "players";

private static readonly ConcurrentDictionary<int, Player> _players = new ConcurrentDictionary<int, Player>();

private readonly ConcurrentQueue<Log> _queue = new();

private readonly SemaphoreSlim _semaphore = new(0);

private readonly ILogger<Players> _logger;

private string _log;

public Players(ILogger<Players> logger)

{

_logger = logger;

var ts = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();

_log = Path.Join(StoragePath, $"{FileNamePrefix}.{ts}.log");

var lastSnapshot = Directory.EnumerateFiles(StoragePath, $"{FileNamePrefix}.*.snapshot").MaxBy(x => x);

if (lastSnapshot != null)

{

_logger.LogInformation("SNAPSHOT {LastSnapshot}", lastSnapshot);

foreach(var l in File.ReadLines(lastSnapshot))

{

if (string.IsNullOrEmpty(l))

{

continue;

}

try

{

var player = JsonSerializer.Deserialize<Player>(l);

if (player == null)

{

continue;

}

_players[player.Id] = player;

// _logger.LogInformation("<<<<<<<<< {player}", player);

}

catch (Exception ex)

{

_logger.LogWarning(ex, "unable to read '{L}'", l);

}

}

}

var readLogsStartingFrom = string.IsNullOrEmpty(lastSnapshot) ? -1 : long.Parse(lastSnapshot.Split('.')[1]);

foreach (var p in Directory.EnumerateFiles(StoragePath, $"{FileNamePrefix}.*.log").Where(x => long.Parse(x.Split('.')[1]) >= readLogsStartingFrom).OrderBy(x => x))

{

_logger.LogInformation("WAL {P}", p);

foreach (var l in File.ReadLines(p))

{

var log = JsonSerializer.Deserialize<Log>(l);

if (log == null)

{

continue;

}

switch (log.op)

{

case "save":

_players[log.el.Id] = log.el;

break;

case "remove" when _players.ContainsKey(log.el.Id):

_players.Remove(log.el.Id, out _);

break;

}

_logger.LogInformation("WAL applied {Log}", log);

}

}

}

public IEnumerator<Player> GetEnumerator() => _players.Values.GetEnumerator();

IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();

public Player Save(Player player)

{

if (player.Id == 0)

{

player = player with { Id = 1 + _players.Keys.DefaultIfEmpty().Max() };

}

Enqueue("save", player);

_players[player.Id] = player;

return player;

}

public bool Remove(Player player)

{

var result = _players.Remove(player.Id, out var removed);

if (result)

{

Enqueue("remove", removed ?? player);

}

return result;

}

public async Task Snapshot()

{

var ts = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();

_log = Path.Join(StoragePath, $"{FileNamePrefix}.{ts}.log"); // from now on we are going to write to the new log file, even in case of failure it does not matter because we are reading all logs greater than last snapshot

var p = Path.Join(StoragePath, $"{FileNamePrefix}.{ts}.snapping");

var nl = new ReadOnlyMemory<byte>(Encoding.ASCII.GetBytes("\n"));

await using var fileStream = File.Create(p);

await using var buffered = new BufferedStream(fileStream);

foreach (var player in _players.Values)

{

await JsonSerializer.SerializeAsync(buffered, player);

await buffered.WriteAsync(nl);

}

await buffered.DisposeAsync();

await fileStream.DisposeAsync();

File.Move(p, p.Replace(".snapping", ".snapshot"));

}

private void Enqueue(string op, Player player)

{

_queue.Enqueue(new Log(op, player, DateTimeOffset.UtcNow.ToUnixTimeMilliseconds()));

_semaphore.Release();

}

private record Log(string op, Player el, long ts);

protected override async Task ExecuteAsync(CancellationToken stoppingToken)

{

_logger.LogInformation("ExecuteAsync called");

await _semaphore.WaitAsync(stoppingToken);

_logger.LogInformation("Semaphore released");

while (!stoppingToken.IsCancellationRequested)

{

if (!_queue.TryDequeue(out var result) || result == null) continue;

await File.AppendAllTextAsync(_log, JsonSerializer.Serialize(result) + "\n", stoppingToken);

_logger.LogInformation("Logged {Result}", result);

}

}

}and now we can add few more players, remove them, call snapshot, do something else and we should see all data being logged

so from now on our service not only really fast but also persistent

and we do not even need to write sql or something else its plain linq

the only thing that should be added here probably is to handle broken files, imagine scenario when only half of line were written to disk and everything were powered off

but because all our storage it plain text it should be pretty easy to handle

also we have natural backups so we do need to bother even better we need cleanup unwanted snapshots and logs

bonus - query language

in case if we do not want to write endpoint for all possible scenarious we may even do tricks like this:

[HttpGet("/players/demo")]

public async Task<ActionResult<IEnumerable<Player>>> ReadDemo([FromQuery] string? where = null)

{

if (string.IsNullOrEmpty(where))

{

return Ok(_players);

}

// dotnet add package Microsoft.CodeAnalysis.CSharp.Scripting

var func = await CSharpScript.EvaluateAsync<Func<Player, bool>>(where, ScriptOptions.Default.WithReferences(typeof(Player).Assembly));

return Ok(_players.Where(func));

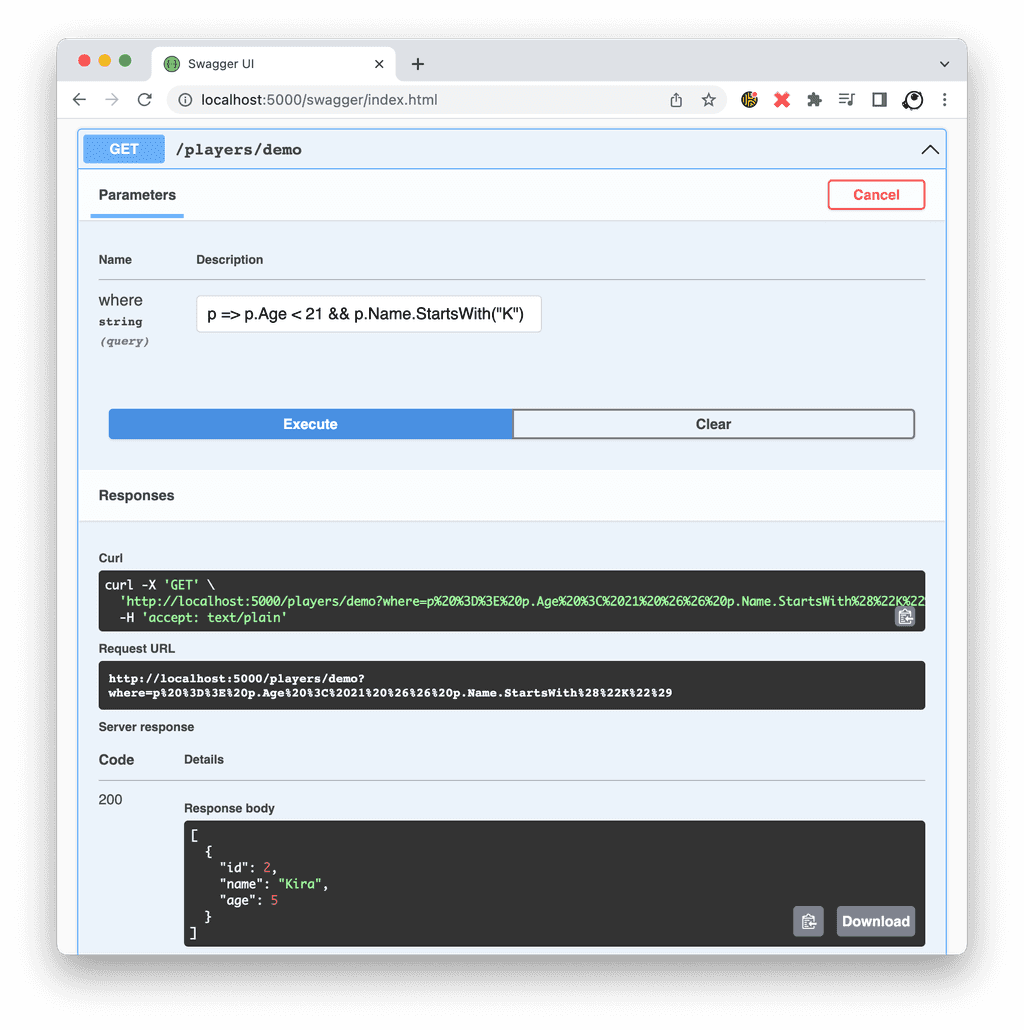

}which will allow us to pass expressions to our collection, aka:

curl 'http://localhost:5000/players?where=p => p.Age > 21 && p.Name.StartsWith("A")'i did not encoded it for it to be readable but here is screenshot from swagger so it will be more meaninghfull

for performance you may want to cache compiled functions somewhere

and to prevent some sensetive data you always may add one more where clause after users to filter further

consideration - sharding

Personaly I do not see any problem about in memory collection - it is a key for performance and we already solved persistence problem

To solve problem about huge collection we should care not about memory size but rather about sharding

Idea here is to have cluster of this services being deployed somewhere

They somehow need to know about each other

No matter which node will receive request it should handle it or proxy to destination

Also we gonna need hashing algorythm to decide which node should store which records

For queries we are going to run them on all nodes and combine results together

But I do not see any reason for all that complexity because can not imagine so big collection which wont fit into currently available virtual machines

The only exception is high availability but it may be achieved by mirror so we may just mirror all incomming requests to two instances or we can build something on our own by reading logs from main server