HTTP2 headers compression, sockets usage, reverse proxy

Trying to figure out the basics of HTTP2 with a set of examples that show how it works and what benefits it brings

HTTP2 header compression

With the help of http compression we can dramatically reduce traffic and costs

But there is one more improvement available

HTTP2, alongside all its other benefits, compresses request and response headers (the HPACK format), and it always does so

To demonstrate this we will use the following example: a simple nginx server (there is no need to create a sample app) that serves a "Hello World" page plus about one thousand bytes of headers

We are going to run this nginx with good old http and with modern http2, and compare the results in our browser

and in the nginx logs (unfortunately I did not find a way to get header-only bytes or the request body size in nginx logs to verify this from curl, but I still believe that request headers are compressed the same way)

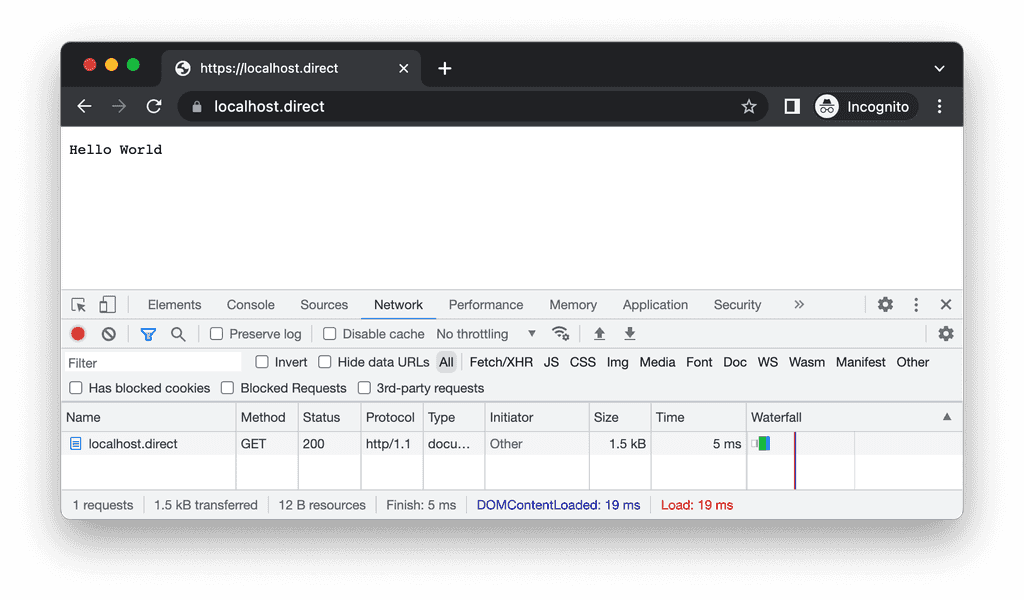

HTTP1.1

server {

listen 80;

listen 443 ssl;

server_name localhost.direct;

ssl_certificate localhost.direct.crt;

ssl_certificate_key localhost.direct.key;

location / {

# root /usr/share/nginx/html;

# index index.html index.htm;

add_header Content-Type text/plain;

add_header X-Hundred-Bytes-0 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-1 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-2 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-3 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-4 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-5 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-6 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-7 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-8 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-9 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

return 200 'Hello World\n';

}

}

docker run -it --rm -p 80:80 -p 443:443 -v $PWD/localhost.direct.crt:/etc/nginx/localhost.direct.crt -v $PWD/localhost.direct.key:/etc/nginx/localhost.direct.key -v $PWD/http.conf:/etc/nginx/conf.d/default.conf -v $PWD/nginx.conf:/etc/nginx/nginx.conf nginx

| protocol | size |
|---|---|
| http1.1 | 1.5kb |

request "GET / HTTP/1.1", 754 bytes received, 1510 bytes sent

and the curl attempt:

curl -s -o /dev/null \

-H 'X-Bytes-1: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-2: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-3: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-4: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-5: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-6: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-7: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-8: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-9: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

-H 'X-Bytes-0: 12345678901234567890123456789012345678901234567890123456789012345678901234567890' \

https://localhost.direct/ -w 'version: %{http_version}\nbody: %{size_download}\nheaders: %{size_header}\n'

will output:

version: 1.1

body: 12

headers: 1498

and from the nginx side it will be:

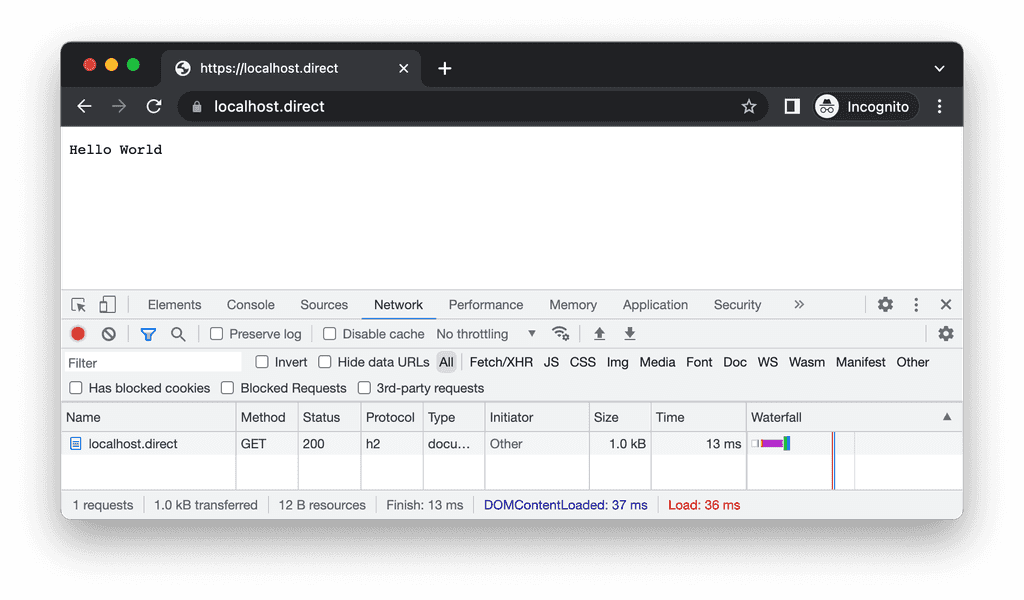

request "GET / HTTP/1.1", 1010 bytes received, 1510 bytes sent

HTTP2

Browsers only use HTTP2 over TLS (HTTPS), so a certificate is required; thankfully there is the localhost.direct project, which is really helpful here. I have just realized that I already used it in the HTTP1.1 example, but I want to leave everything as is, because it proves that TLS by itself does not change anything here

server {

listen 80;

listen 443 ssl http2;

server_name localhost.direct;

ssl_certificate localhost.direct.crt;

ssl_certificate_key localhost.direct.key;

location / {

# root /usr/share/nginx/html;

# index index.html index.htm;

add_header Content-Type text/plain;

add_header X-Hundred-Bytes-0 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-1 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-2 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-3 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-4 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-5 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-6 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-7 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-8 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

add_header X-Hundred-Bytes-9 12345678090123456780901234567809012345678090123456780901234567809012345678090123456780901234567809012345678090;

return 200 'Hello World\n';

}

}

docker run -it --rm -p 80:80 -p 443:443 -v $PWD/localhost.direct.crt:/etc/nginx/localhost.direct.crt -v $PWD/localhost.direct.key:/etc/nginx/localhost.direct.key -v $PWD/http2.conf:/etc/nginx/conf.d/default.conf -v $PWD/nginx.conf:/etc/nginx/nginx.conf nginx

| protocol | size |
|---|---|
| http2 | 1.0kb |

request "GET / HTTP/2.0", 506 bytes received, 1048 bytes sent

and the same curl attempt will give us:

version: 2

body: 12

headers: 1470

and in the nginx logs:

request "GET / HTTP/2.0", 701 bytes received, 1047 bytes sent

Notes:

- the most important one: http2 does not compress bodies (in both cases the response body is 12 bytes), so everything we did for http compression is still applicable and wanted
- http2 compresses request and response headers out of the box, with nothing special needed on either the client or the server side
- the header size reported by curl barely differs between the two examples, most likely because curl counts the headers after decoding them rather than the bytes on the wire

So if you have a bazillion cookies (all kinds of remarketing, facebook, jwt tokens and so on), the effect of enabling http2 may be noticeable
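Part of the saving comes from the Huffman coding that HPACK (RFC 7541) applies to header strings. Below is a rough sketch in Go that estimates the encoded size of a digits-only value like the ones used above. The function name `huffmanSize` and the 100-digit sample value are made up for illustration, only the per-digit code lengths are taken from the RFC, and the estimate ignores header names, length prefixes and the HPACK indexing table.

```go
package main

import (
	"fmt"
	"strings"
)

// digitBits lists the Huffman code length, in bits, that RFC 7541
// (Appendix B) assigns to each ASCII digit.
var digitBits = map[byte]int{
	'0': 5, '1': 5, '2': 5,
	'3': 6, '4': 6, '5': 6, '6': 6, '7': 6, '8': 6, '9': 6,
}

// huffmanSize estimates how many bytes a digits-only header value occupies
// after HPACK Huffman coding (the last byte is padded to a full octet).
// It is a hypothetical helper for this note, not part of any library.
func huffmanSize(value string) (int, error) {
	bits := 0
	for i := 0; i < len(value); i++ {
		n, ok := digitBits[value[i]]
		if !ok {
			return 0, fmt.Errorf("unsupported character %q", value[i])
		}
		bits += n
	}
	return (bits + 7) / 8, nil
}

func main() {
	value := strings.Repeat("1234567890", 10) // assumed 100-digit sample value
	size, err := huffmanSize(value)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("plain: %d bytes, Huffman-coded: about %d bytes\n", len(value), size)
}
```

For a 100-digit value this gives 72 bytes instead of 100, which is in the same ballpark as the roughly one third that nginx reported as saved. Repeated header name and value pairs on later requests of the same connection can shrink even further thanks to the HPACK table, which this sketch does not model.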

HTTP2 sockets usage

The second benefit of HTTP2 is that it reuses the same socket for all transfers between the client and the server

In this demo we have a small web app with a single page that loads ten javascript files, each of which prints its hello message

The rendered page will look something like:

demo

hello /echo/say1

hello /echo/say2

hello /echo/say3

hello /echo/say4

hello /echo/say5

hello /echo/say6

hello /echo/say7

hello /echo/say8

hello /echo/say9

hello /echo/say0

Nothing fancy here, but it will allow us to see what happens underneath

To count incoming connections I used a sample found on Stack Overflow (the link is in the code comment)

Here is the app:

package main

import (

"fmt"

"net"

"net/http"

"os"

)

func main() {

router := http.NewServeMux()

router.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {

fmt.Printf("%s %s\n", r.Proto, r.URL.Path)

w.Header().Set("content-type", "text/html")

w.Write([]byte(`

<!DOCTYPE html>

<html>

<head>

<title>demo</title>

</head>

<body>

<h1>demo</h1>

<script src="/echo/say1"></script>

<script src="/echo/say2"></script>

<script src="/echo/say3"></script>

<script src="/echo/say4"></script>

<script src="/echo/say5"></script>

<script src="/echo/say6"></script>

<script src="/echo/say7"></script>

<script src="/echo/say8"></script>

<script src="/echo/say9"></script>

<script src="/echo/say0"></script>

</body>

</html>

`))

})

router.HandleFunc("/echo/", func(w http.ResponseWriter, r *http.Request) {

fmt.Printf("%s %s\n", r.Proto, r.URL.Path)

w.Header().Set("content-type", "application/javascript")

w.Write([]byte(fmt.Sprintf("document.write('hello %s<br>')", r.URL.Path)))

})

s := &http.Server{

ConnState: func(c net.Conn, cs http.ConnState) {

if cs == http.StateNew {

fmt.Println("GOT NEW TCP CONNECTION") // https://stackoverflow.com/questions/51317122/how-to-get-number-of-idle-and-active-connections-in-go

}

},

Handler: router,

}

if os.Args[1] == "http" {

listener, _ := net.Listen("tcp", ":80")

fmt.Println("open http://localhost/")

s.Serve(listener)

}

if os.Args[1] == "http2" {

listener, _ := net.Listen("tcp", ":443")

fmt.Println("open https://localhost.direct/")

s.ServeTLS(listener, "localhost.direct.crt", "localhost.direct.key")

}

}

HTTP

docker run -it --rm -p 80:80 -v ${PWD}/main.go:/code/main.go -w /code golang go run main.go http

Then open http://localhost/ (feel free to change ports if needed)

In the container logs you will see:

GOT NEW TCP CONNECTION

GOT NEW TCP CONNECTION

GOT NEW TCP CONNECTION

GOT NEW TCP CONNECTION

GOT NEW TCP CONNECTION

GOT NEW TCP CONNECTION

Note: here we can also see how the browser tries to optimize socket usage and limits the number of connections to our backend, in this example to six

HTTP2

docker run -it --rm -p 443:443 -v ${PWD}/main.go:/code/main.go -v ${PWD}/localhost.direct.crt:/code/localhost.direct.crt -v ${PWD}/localhost.direct.key:/code/localhost.direct.key -w /code golang go run main.go http2

Once again, ports may be changed here

And in the container logs you will see only one

GOT NEW TCP CONNECTION

Which is the key difference that we wanted to see/feel/catch
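The same effect can be reproduced without a browser or docker. Here is a minimal sketch that uses Go's `net/http/httptest` package as a throwaway TLS server and Go's own HTTP client in place of the browser, so the numbers will not match the browser's six connections exactly. The helper name `countConnections`, the 50 ms delay and the warm-up request are my assumptions for the sake of the demo.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"net/http"
	"net/http/httptest"
	"sync"
	"sync/atomic"
	"time"
)

// countConnections starts a throwaway TLS test server, sends one warm-up
// request followed by n concurrent requests, and reports the protocol of
// the warm-up response plus how many TCP connections the server accepted.
func countConnections(enableHTTP2 bool, n int) (proto string, conns int64) {
	var opened int64
	handler := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		time.Sleep(50 * time.Millisecond) // keep the requests overlapping
		fmt.Fprintln(w, "hello")
	})
	ts := httptest.NewUnstartedServer(handler)
	ts.EnableHTTP2 = enableHTTP2
	ts.Config.ConnState = func(c net.Conn, cs http.ConnState) {
		if cs == http.StateNew {
			atomic.AddInt64(&opened, 1) // same trick as in the app above
		}
	}
	ts.StartTLS()
	defer ts.Close()

	client := ts.Client() // trusts the test certificate
	get := func() string {
		resp, err := client.Get(ts.URL)
		if err != nil {
			return "error: " + err.Error()
		}
		defer resp.Body.Close()
		io.Copy(io.Discard, resp.Body)
		return resp.Proto
	}

	proto = get() // warm-up, so a connection already exists
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			get()
		}()
	}
	wg.Wait()
	return proto, atomic.LoadInt64(&opened)
}

func main() {
	for _, h2 := range []bool{false, true} {
		proto, conns := countConnections(h2, 10)
		fmt.Printf("%s: %d connection(s)\n", proto, conns)
	}
}
```

With HTTP/1.1 every overlapping request needs its own connection, so the counter grows with concurrency, while with HTTP/2 all ten requests are multiplexed over the single connection opened by the warm-up request.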

HTTP2 behind reverse proxy

After a few attempts it seems that it is not possible at the moment O_o

Here is what I ended up with

The idea was to run the previous example in http2 mode, proxied by nginx

server {

listen 80;

listen 443 ssl http2;

server_name localhost.direct;

ssl_certificate localhost.direct.crt;

ssl_certificate_key localhost.direct.key;

# location / {

# proxy_pass https://http.localhost.direct;

# }

# With this config, even though the client side sees HTTP2, the logs of the proxied service show that requests arrive as HTTP1.1

location / {

proxy_pass https://http2.localhost.direct:443;

}

}

# docker run -it --rm --name=http --ip=172.17.0.80 -v ${PWD}/main.go:/code/main.go -w /code golang go run main.go http

# docker run -it --rm --name=http2 --ip=172.17.0.43 -v ${PWD}/main.go:/code/main.go -v ${PWD}/localhost.direct.crt:/code/localhost.direct.crt -v ${PWD}/localhost.direct.key:/code/localhost.direct.key -w /code golang go run main.go http2

docker run -it --rm --name=http2 -v ${PWD}/main.go:/code/main.go -v ${PWD}/localhost.direct.crt:/code/localhost.direct.crt -v ${PWD}/localhost.direct.key:/code/localhost.direct.key -w /code golang go run main.go http2

Notes:

- we are not exposing container ports on purpose, requests will be made via the reverse proxy
- I was not able to set a fixed ip address in my case: `docker inspect http2` always showed `172.17.0.2`, so I just hardcoded it instead of passing `--ip=172.17.0.43` to the docker run command

And run our nginx:

docker run -it --rm -p 443:443 --link=http2 --add-host=http2.localhost.direct:172.17.0.2 -v $PWD/localhost.direct.crt:/etc/nginx/localhost.direct.crt -v $PWD/localhost.direct.key:/etc/nginx/localhost.direct.key -v $PWD/proxy.conf:/etc/nginx/conf.d/default.conf -v $PWD/nginx.conf:/etc/nginx/nginx.conf nginx

And from the browser we see the expected http2, but from the backend service's point of view it is still the old protocol

I cannot say if it is good or bad; in the end, in the majority of cases both backend and proxy will be sitting side by side in a private network, so all this should not be a real problem
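The protocol is negotiated per hop, so what the client sees and what the backend sees are independent of each other. The following sketch illustrates this with Go's `httputil.ReverseProxy` standing in for nginx (it is not how nginx works internally) and with `httptest` servers instead of containers; the function name `protocolsPerHop` is made up for this example.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// protocolsPerHop puts a reverse proxy that speaks HTTP/2 to clients in
// front of a plain HTTP backend and returns the protocol observed on each
// side of the proxy.
func protocolsPerHop() (clientSaw, backendSaw string, err error) {
	seen := make(chan string, 1)
	backend := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		seen <- r.Proto // protocol used on the proxy -> backend hop
		fmt.Fprintln(w, "hello from backend")
	}))
	defer backend.Close()

	target, err := url.Parse(backend.URL)
	if err != nil {
		return "", "", err
	}

	// The "edge": TLS + HTTP/2 towards the client, plain HTTP to the backend.
	edge := httptest.NewUnstartedServer(httputil.NewSingleHostReverseProxy(target))
	edge.EnableHTTP2 = true
	edge.StartTLS()
	defer edge.Close()

	resp, err := edge.Client().Get(edge.URL)
	if err != nil {
		return "", "", err
	}
	defer resp.Body.Close()
	io.Copy(io.Discard, resp.Body)

	return resp.Proto, <-seen, nil
}

func main() {
	clientSaw, backendSaw, err := protocolsPerHop()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("client  <-> proxy:  ", clientSaw)
	fmt.Println("proxy   <-> backend:", backendSaw)
}
```

The client side reports HTTP/2.0 while the backend handler sees HTTP/1.1, which is the same picture as with nginx in front of the go app.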

Later on, while preparing the https and http2 examples for dotnet and nodejs, it turned out that the nodejs http2 server serves only http2, so I tried the following:

const {createSecureServer} = require('http2')

const {readFileSync} = require('fs')

const server = createSecureServer({

key: readFileSync('localhost.direct.key'),

cert: readFileSync('localhost.direct.crt')

})

server.listen(443)

server.on('error', (err) => console.dir(err))

server.on('request', (req, res) => {

res.writeHead(200)

res.end('hello world\n')

})

docker run -it --rm --name=http2 -v ${PWD}/main.js:/code/main.js -v ${PWD}/localhost.direct.crt:/code/localhost.direct.crt -v ${PWD}/localhost.direct.key:/code/localhost.direct.key -w /code node node main.js

Then I ran the same nginx as before and got an error in the browser

Missing ALPN Protocol, expected `h2` to be available.

If this is a HTTP request: The server was not configured with the `allowHTTP1` option or a listener for the `unknownProtocol` event.

Which makes me believe this whole HTTP2 story is good only for the edge, and if you are sitting somewhere behind Cloudflare, which is (or was) using nginx, it would not make much sense

Here is a quotation from nginx

HTTPS and HTTP2 in dotnet

HTTP2 in dotnet is enabled by default starting from 3.1 or 5.0 (I do not remember the exact version, but at the moment of writing the current version is 7.0, so it should just work out of the box)

There is no need to change anything in the application itself; the only thing we need to do is add the following to our appsettings.json:

{

"Kestrel": {

"Endpoints": {

"Http": {

"Url": "http://dotnet.localhost.direct"

},

"Https": {

"Url": "https://dotnet.localhost.direct",

"Certificate": {

"Path": "localhost.direct.crt",

"KeyPath": "localhost.direct.key"

}

}

}

}

}

Notes:

- you can still use ports, e.g. "Url": "https://dotnet.localhost.direct:50001"
- you are not required to add both http and https, you can add only one
- you may want to use a url like "Url": "https://0.0.0.0"

HTTPS and HTTP2 in node

Here is an example of a raw https server without any dependencies

const https = require('https')

const fs = require('fs')

function handler(_, res) {

res.writeHead(200)

res.end('hello world\n')

}

const server = https.createServer({

key: fs.readFileSync('localhost.direct.key', 'utf8'),

cert: fs.readFileSync('localhost.direct.crt', 'utf8')

}, handler)

server.listen(443)

and here is one for expressjs

const express = require('express')

const fs = require('fs')

const http = require('http')

const https = require('https')

const app = express()

app.get('/', (_, res) => res.send('Hello World'))

// app.listen(4000) // instead of this do following:

const httpServer = http.createServer(app)

const httpsServer = https.createServer({

key: fs.readFileSync('localhost.direct.key', 'utf8'),

cert: fs.readFileSync('localhost.direct.crt', 'utf8')

}, app)

httpServer.listen(80)

httpsServer.listen(443)

But for HTTP2 we need something else

const {createSecureServer} = require('http2')

const {readFileSync} = require('fs')

const server = createSecureServer({

key: readFileSync('localhost.direct.key'),

cert: readFileSync('localhost.direct.crt')

})

server.listen(443)

server.on('error', (err) => console.dir(err))

server.on('request', (req, res) => {

res.writeHead(200)

res.end('hello world\n')

})

The interesting fact about the last example: if we run

curl https://localhost.direct/

everything will work as expected (underneath, our server and curl agree to talk http2), but if we run

curl https://localhost.direct/ --http1.1

we will receive an error: "Unknown ALPN Protocol, expected h2 to be available."

This is what was used in the reverse proxy section to check how it behaves behind a proxy
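The agreement between client and server happens during the TLS handshake via the ALPN extension: the client offers a list of protocols and the server picks one it supports. As a rough illustration, here is a Go sketch that performs only the handshake against a throwaway `httptest` server and prints the agreed protocol. It is a stand-in for the curl and node pair above, not a reproduction of it, and the helper name `negotiatedProtocol` is hypothetical.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// negotiatedProtocol starts a throwaway TLS test server, performs a TLS
// handshake offering both h2 and http/1.1 via ALPN, and returns the
// protocol the two sides agreed on.
func negotiatedProtocol(serverSupportsHTTP2 bool) (string, error) {
	ts := httptest.NewUnstartedServer(http.NotFoundHandler())
	ts.EnableHTTP2 = serverSupportsHTTP2
	ts.StartTLS()
	defer ts.Close()

	pool := x509.NewCertPool()
	pool.AddCert(ts.Certificate()) // trust the test server's certificate

	conn, err := tls.Dial("tcp", ts.Listener.Addr().String(), &tls.Config{
		RootCAs:    pool,
		NextProtos: []string{"h2", "http/1.1"}, // what the client offers
	})
	if err != nil {
		return "", err
	}
	defer conn.Close()

	return conn.ConnectionState().NegotiatedProtocol, nil
}

func main() {
	for _, h2 := range []bool{true, false} {
		proto, err := negotiatedProtocol(h2)
		if err != nil {
			fmt.Println("handshake failed:", err)
			continue
		}
		fmt.Printf("server supports http2: %v, negotiated: %s\n", h2, proto)
	}
}
```

When the server supports http2 the handshake settles on `h2`, otherwise on `http/1.1`. A client that offers only `http/1.1` to an h2-only server has nothing in common with it, which is the situation behind the node error message.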

HTTP2 Server Push

Another interesting concept is HTTP2 Server Push

The idea was to build a small app that serves index.html

Then the browser downloads style.css, which takes 2 seconds to be served

After that, because of the styles, the browser realizes that it needs an image as well and downloads it, which also takes 2 seconds

So in total the page load takes approximately 4 seconds

But with the help of push, we should be able to push both the styles and the image alongside index.html

Here is the sample app:

package main

import (

"fmt"

"log"

"net"

"net/http"

"os"

"time"

)

func main() {

router := http.NewServeMux()

router.HandleFunc("/style.css", func(w http.ResponseWriter, r *http.Request) {

fmt.Printf("%s %s\n", r.Proto, r.URL.Path)

time.Sleep(2 * time.Second)

b, _ := os.ReadFile("style.css")

w.Header().Set("content-type", "text/css")

w.Write(b)

})

router.HandleFunc("/sc2.jpeg", func(w http.ResponseWriter, r *http.Request) {

fmt.Printf("%s %s\n", r.Proto, r.URL.Path)

time.Sleep(2 * time.Second)

b, _ := os.ReadFile("sc2.jpeg")

w.Header().Set("content-type", "image/jpeg")

w.Write(b)

})

router.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {

fmt.Printf("%s %s\n", r.Proto, r.URL.Path)

if pusher, ok := w.(http.Pusher); ok {

fmt.Println("PUSH IS SUPPORTED")

if err := pusher.Push("/style.css", nil); err != nil {

log.Printf("Failed to push: %v", err)

}

if err := pusher.Push("/sc2.jpeg", nil); err != nil {

log.Printf("Failed to push: %v", err)

}

}

b, _ := os.ReadFile("index.html")

w.Header().Set("content-type", "text/html")

w.Write(b)

})

s := &http.Server{

ConnState: func(c net.Conn, cs http.ConnState) {

if cs == http.StateNew {

fmt.Println("GOT NEW TCP CONNECTION") // https://stackoverflow.com/questions/51317122/how-to-get-number-of-idle-and-active-connections-in-go

}

},

Handler: router,

}

if os.Args[1] == "http" {

listener, _ := net.Listen("tcp", ":80")

fmt.Println("open http://localhost/")

s.Serve(listener)

}

if os.Args[1] == "http2" {

listener, _ := net.Listen("tcp", ":443")

fmt.Println("open https://localhost.direct/")

s.ServeTLS(listener, "localhost.direct.crt", "localhost.direct.key")

}

}

But here things get really strange

In Safari it does indeed work, at least the overall load time is around 2 seconds (i.e. both requests for styles and image were made in parallel underneath)

But in Chrome it does not work anymore, and go complains "Failed to push: feature not supported"

And when I was trying to figure out how to do the same in dotnet I found this answer

So it seems like the HTTP2 Server Push feature is dead
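The "feature not supported" message is what Go returns from `Push` when the client has switched push off in its HTTP2 settings. Go's own HTTP client does that as well, so the Chrome behaviour can be reproduced without a browser. Below is a minimal sketch under that assumption, using an `httptest` server; the names `tryPush` and `pushOutcome` are made up for illustration.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// pushOutcome records what the handler observed (hypothetical helper type).
type pushOutcome struct {
	supported bool  // whether the ResponseWriter implements http.Pusher
	err       error // error returned by Push, if it was attempted
}

// tryPush serves one request from a throwaway TLS test server and reports
// whether the handler could push /style.css to Go's HTTP client.
func tryPush(enableHTTP2 bool) (pushOutcome, error) {
	outcomes := make(chan pushOutcome, 1)
	handler := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/" {
			return // only the page request is of interest here
		}
		pusher, ok := w.(http.Pusher)
		out := pushOutcome{supported: ok}
		if ok {
			out.err = pusher.Push("/style.css", nil)
		}
		outcomes <- out
		fmt.Fprintln(w, "hello")
	})
	ts := httptest.NewUnstartedServer(handler)
	ts.EnableHTTP2 = enableHTTP2
	ts.StartTLS()
	defer ts.Close()

	resp, err := ts.Client().Get(ts.URL + "/")
	if err != nil {
		return pushOutcome{}, err
	}
	defer resp.Body.Close()
	io.Copy(io.Discard, resp.Body)

	return <-outcomes, nil
}

func main() {
	for _, h2 := range []bool{false, true} {
		out, err := tryPush(h2)
		if err != nil {
			fmt.Println("request failed:", err)
			continue
		}
		fmt.Printf("http2=%v pusher available=%v push error=%v\n", h2, out.supported, out.err)
	}
}
```

Over HTTP/1.1 the type assertion to `http.Pusher` simply fails, and over HTTP/2 the assertion succeeds but `Push` itself is rejected, which matches what the go app logged for Chrome.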

Conclusions

http2 may reduce traffic even more, also it will utilize less socket connections

but it does make sence only for publicly available services

in case if your service is behind nginx/ingress all you need to do is enable http2 in nginx/ingress and there is no need to do anything to your app because nginx will use old http behind the scene, but for client it will serve http2 - at least we will reduce traffic outside our network

if you are behind reverse proxy like cloudflare then probaly it does not make much sense in general, cloudflare was made on top of nginx (ok, they have rewritten it and created their own proxy but still this rules probably are applied)